The influence of artificial intelligence (AI) on the field of radiology has increased substantially in recent years. Today, AI can be applied to many aspects of the daily work within a radiology department, from automation of operational tasks and post-scanning image reconstruction to reading and interpreting images. Furthermore, in related medical disciplines, such as radiation oncology imaging research, AI has been applied to organ and lesion segmentation, image registration, fiducial/marker detection, radiomics, and more [1]. Yet AI consumers still face challenges in integrating AI technologies into their existing IT infrastructure and workflows. At the same time, AI developers struggle to train AI systems effectively on meaningful data.

Integrating AI into the current radiology workflow: the challenge nobody talks about

Since there is no universally standardized way to perform a radiological read today, integrating AI into radiology workflows without disrupting established practices is challenging, to say the least. Typically, radiologists work with two separate systems: a PACS for image review and a RIS or reporting system for report dictation. Integrating AI into such a workflow would mean adding a third, disconnected component to the process.

Currently, most AI vendors offer algorithms for computer-aided detection of pathologies that function as black-box tools: the user can only supply the input and observe the output, without insight into the underlying processes or algorithms. A typical workflow involves sending medical images to these tools or to cloud-based AI systems. The AI algorithms then generate findings and send screenshots of the results back to PACS, potentially exposing them to all users with PACS access. A different approach to workflow integration is a dedicated application that can be launched from PACS and gives radiologists the option to accept or reject the AI-generated findings. Accepted findings are often sent to PACS as screenshots, so-called DICOM Secondary Captures.

One significant challenge is that measurements performed by AI and presented as static screenshots typically cannot be further processed or modified by radiologists, which limits their ability to make adjustments or corrections when errors are identified. Because the primary goal of AI is to prevent radiologists from overlooking suspicious findings, AI systems tend to err on the side of caution, often resulting in a high incidence of false positives. If an AI system generates a substantial number of findings but the radiologist agrees with only a fraction of them, the radiologist faces the daunting task of explaining in the report why the remaining AI findings were rejected. This requires stepping out of the regular reading workflow each time and may even increase the complexity of an already intricate and lengthy free-text report.

This situation raises concerns regarding the structure and content of the radiological report and how it should be adapted to effectively incorporate the AI findings without significantly interrupting the reporting process and compromising the radiologist's credibility.

A standardized and structured reporting process is key for seamless AI integration

Balancing the integration of AI-generated information with the radiologist's expertise is crucial to ensure a smooth workflow that optimizes the benefits of AI while upholding the radiologist's role as the final decision-maker in the diagnostic process. In this regard, a standardized and structured reporting framework, such as the one offered by mint Lesion™, provides a robust foundation for seamlessly integrating AI into the radiology workflow.

“With our interactive approach to guide radiologists through the radiological read, we have paved the way for integrating AI into our product and improving the accessibility and usability of both the AI technology and its outcomes. Instead of offering physicians a separate tool, we can directly incorporate AI results into mint Lesion™, the system with which they perform the read and the documentation of structured reports. The most significant advantage of mint Lesion™ here is that it enables radiologists to actively engage with AI-generated findings. They can review, dismiss or adjust any finding and contour they disagree with within a single, uninterrupted workflow. Additionally, mint Lesion™ has the potential to integrate multiple AI findings from different sources, offering flexibility and the ability to choose and manage various findings within the platform. There is no need to switch between different systems, allowing for a more streamlined and efficient process and retaining AI results as structured data points of a structured radiological report.”

Matthias Baumhauer,

Co-Founder and Managing Director of Mint Medical GmbH

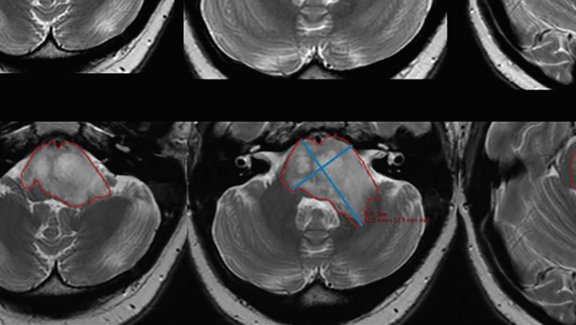

Unlike platforms that restrict post-processing of AI-generated findings, mint Lesion™ enables radiologists to fine-tune the contours and make necessary adjustments. Moreover, it equips the reader with contextual information to effectively evaluate AI findings by synchronizing related images and all previous measurements. The system also allows AI results to be adopted as the basis for observations that are then further classified and attributed. Such operational integration of AI into the read process ensures that relevant and precise AI findings are smoothly incorporated into structured reports, providing comprehensive information to referring physicians or tumor boards.
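To make the contrast with static screenshots more concrete, the following is a minimal Python sketch of an AI finding kept as a structured, editable data point. It is purely illustrative and does not reflect the actual data model or API of mint Lesion™ or of any AI vendor; the names `AIFinding`, `ReviewStatus`, and `report_findings` are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Tuple

Point = Tuple[float, float]  # in-plane coordinates in millimetres (assumption)


class ReviewStatus(Enum):
    PENDING = "pending"
    ACCEPTED = "accepted"
    ADJUSTED = "adjusted"
    DISMISSED = "dismissed"


@dataclass
class AIFinding:
    """One AI-generated finding stored as structured data (hypothetical schema)."""
    finding_id: str
    source: str            # name of the AI tool that produced the finding
    label: str             # e.g. "lung nodule"
    contour: List[Point]   # polygon vertices outlining the finding
    status: ReviewStatus = ReviewStatus.PENDING

    def area_mm2(self) -> float:
        """Area enclosed by the contour, computed with the shoelace formula."""
        pts = self.contour
        total = 0.0
        for (x1, y1), (x2, y2) in zip(pts, pts[1:] + pts[:1]):
            total += x1 * y2 - x2 * y1
        return abs(total) / 2.0

    def accept(self) -> None:
        self.status = ReviewStatus.ACCEPTED

    def dismiss(self) -> None:
        self.status = ReviewStatus.DISMISSED

    def adjust(self, new_contour: List[Point]) -> None:
        """Replace the contour with the radiologist's correction."""
        self.contour = list(new_contour)
        self.status = ReviewStatus.ADJUSTED


def report_findings(findings: List[AIFinding]) -> List[AIFinding]:
    """Keep only findings the radiologist accepted or adjusted."""
    keep = {ReviewStatus.ACCEPTED, ReviewStatus.ADJUSTED}
    return [f for f in findings if f.status in keep]


# Two findings from two hypothetical AI sources.
nodule = AIFinding("f1", "vendor-a", "lung nodule",
                   [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0), (0.0, 10.0)])
artifact = AIFinding("f2", "vendor-b", "lung nodule",
                     [(0.0, 0.0), (2.0, 0.0), (2.0, 2.0), (0.0, 2.0)])

# The radiologist tightens one contour and dismisses the false positive.
nodule.adjust([(0.0, 0.0), (10.0, 0.0), (10.0, 5.0), (0.0, 5.0)])
artifact.dismiss()

reported = report_findings([nodule, artifact])
```

Because the contour is stored as data and not as pixels in a screenshot, the measurement can be recomputed after every adjustment, and dismissed findings simply do not reach the report, with no free-text explanation needed.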

Issues behind the limitations of modern AI algorithms

As of today, AI products for computer-aided detection of pathologies are typically trained by supervised machine learning on curated, annotated images. While this approach yields satisfactory results to some extent, it becomes problematic when it must cover the diverse appearances of a particular kind of pathology. For instance, vascular anomalies or lesions may vary in their visual characteristics, making it arduous for developers to construct a well-balanced training dataset that adequately captures all the different subtypes.

One of the major needs of AI developers, therefore, is high-quality, high-volume, longitudinal data with detailed semantic context per pathology. The availability of comprehensive and diverse datasets is crucial for training robust AI algorithms, but the variations in imaging settings, protocols, and clinical scenarios across healthcare institutions make it difficult to gather standardized data for AI research.

Additionally, there are major complexities and limitations associated with healthcare data collection for AI training and research, particularly within the European Union, where medical images are considered personal data and fall within the scope of GDPR. Striking a balance between privacy regulations and enabling innovative AI development remains a crucial task for researchers and policymakers alike.

AI’s potential beyond contouring

There is a growing recognition that future AI systems should possess a deeper understanding of diseases beyond mere measurements, contours, and images. Training AI in this regard involves more than just exposing it to a set of annotated images. To truly harness the capabilities of AI in providing accurate measurements and valuable assistance, it must learn and comprehend the potential outcomes of a radiological report and their impact on subsequent diagnoses and therapies. For instance, whether a tumor infiltrates a neighboring structure can be a pivotal tipping point that determines which therapeutic options are eligible. Without knowledge of the therapy context, anatomical details, patient-specific factors, and available treatment options, the AI's capacity to offer effective support remains very limited.

The AI of the future should be able to provide answers that take relevant clinical factors into account. By providing this additional context to the AI, we may create an assistant that not only identifies suspicious findings on an image but can also potentially generate a significant portion of a radiological report.

However, to provide AI with this additional context, the above-mentioned challenges must be addressed first, and several of them can be effectively mitigated through the use of software like mint Lesion™. Through the standardized and structured collection of image data, mint Lesion™ can provide researchers with high-quality data in the required format and thereby support the development of more sophisticated AI algorithms.

[1] Tang X. The role of artificial intelligence in medical imaging research. BJR Open. 2019 Nov 28;2(1):20190031. doi: 10.1259/bjro.20190031. PMID: 33178962; PMCID: PMC7594889.